Artificial intelligence and machine learning have brought many modern conveniences, from virtual assistants to self-driving cars. They have also given rise to a new and concerning cybersecurity threat: deepfake scams.

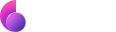

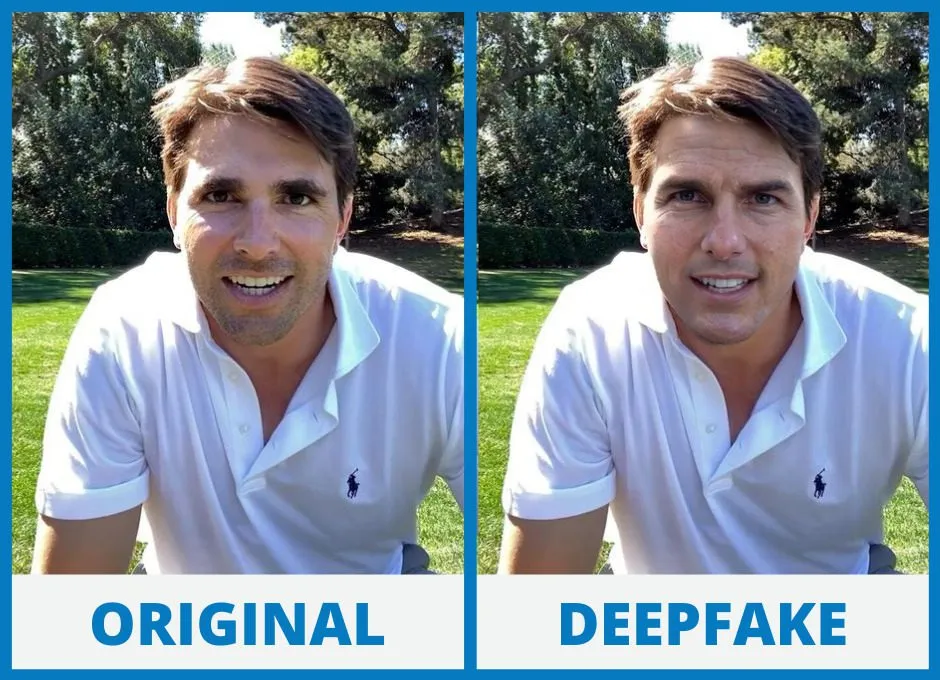

Deepfakes are AI-generated or AI-manipulated videos, images, or audio recordings that show a real person doing or saying something they never actually did or said. Deepfake scams range from seemingly harmless pranks to targeted attacks with malicious intent.

In this quick guide, we’ll explore the dangers of deepfake scams, how they work, and what you can do to protect yourself.

What is a deepfake?

First things first: what exactly is a deepfake, and how does it work? Understanding the mechanism behind deepfakes is crucial to defending against them.

The term deepfake combines “deep learning” and “fake”. Deep learning refers to the use of artificial neural networks, a type of AI; in this context, those networks create fake media by analyzing and synthesizing existing images or videos.

To put it simply, deepfake algorithms are trained on a large dataset of real footage of a person. From that footage, they learn to reproduce the person's facial expressions, movements, and voice, and then use this to generate a new video or audio recording that appears authentic. This means that, given enough data, almost anyone can be targeted by a deepfake scam.

As you might imagine, this technology has significant potential for abuse, particularly in the hands of malicious actors. From fraud and identity theft to political propaganda and cyberbullying, the consequences of deepfake scams can be far-reaching.

Deepfake scam statistics

To show you just how much of a threat deepfake scams have become, here are some alarming statistics:

- According to a Sumsub report, the share of identity fraud involving deepfakes rose sharply in the U.S. and Canada between 2022 and Q1 2023: from 0.2% to 2.6% in the U.S., and from 0.1% to 4.6% in Canada.

- A study by Deep Instinct (a cybersecurity company) found that 46% of organizations believe generative AI will make them more vulnerable to cyberattacks.

- Redline (a deep-tech startup) estimates that approximately 500,000 voice and video deepfakes will be shared on social media platforms in 2023.

- A study from Onfido (a leading identity verification and authentication platform) indicates a 3,100% surge in deepfake-related phishing and fraud incidents from 2022 to 2023 as generative AI tools advance and deepfake technology becomes more sophisticated and accessible.

- A report published by Euronews (a leading European news channel) stated that losses from deepfake scams were estimated at $12.5 billion globally in 2024 alone.

These statistics offer only a partial picture of the growing deepfake threat. As the technology continues to advance, deepfakes are likely to become even more sophisticated and convincing.

Deepfake dangers

To better understand the dangers of deepfake scams, let’s take a closer look at some specific scenarios in which this technology is being exploited for malicious purposes.

Financial fraud

Financial fraud is among the most significant dangers posed by deepfake scams. The technology makes it easier for scammers to trick unsuspecting people into sharing sensitive information or making financial transactions, and many have already fallen victim to such scams.

For example, scammers can create a deepfake of a bank representative or someone from the victim’s personal contact list, asking for sensitive information or money transfers. This can lead to identity theft and financial losses.

Reputation damage

In addition to financial fraud, deepfakes can also cause significant harm to an individual’s or organization’s reputation.

With the ability to create seemingly authentic videos and audio recordings, malicious actors can spread false information or damaging content that tarnishes a person’s character or credibility. This not only affects victims personally but can also harm their professional lives.

Political manipulation

The potential impact of deepfakes goes beyond personal and financial harm; it can also have severe consequences for society as a whole. In particular, deepfake technology can be used to manipulate public opinion and influence political outcomes.

For instance, deepfakes of politicians or world leaders making controversial statements could sway voters or create social unrest.

How to spot a deepfake?

Knowing the statistics and dangers of deepfakes is useful, but how do you actually tell when you’re dealing with one? While deepfakes can seem convincingly real, they often have tell-tale signs that can help you spot them.

Here are a few signs of a deepfake that you can look out for:

- Blurry or distorted images and videos

- Inconsistent lighting or shadows in the footage

- Unnatural facial expressions or movements

- Audio that is slightly off-sync with the video

- Unusual background sounds or lack thereof

Anything that seems “off” or doesn’t quite match up with reality could indicate a deepfake. It’s also important to trust your instincts and cross-check information against multiple sources before believing something that seems suspicious.

Below is a good example of a deepfake video published on YouTube (for educational purposes). See if you can spot any of the signs mentioned above:

How to protect yourself from deepfakes?

So let’s say that you have spotted a deepfake, or someone has attempted to scam you using this technology. What can you do to protect yourself?

Here are a few tips to keep in mind:

- Be cautious when sharing personal information or making financial transactions online, even if it’s with someone you know. Always verify the authenticity of any request before taking action.

- Use reliable identity verification and authentication services to protect your personal information.

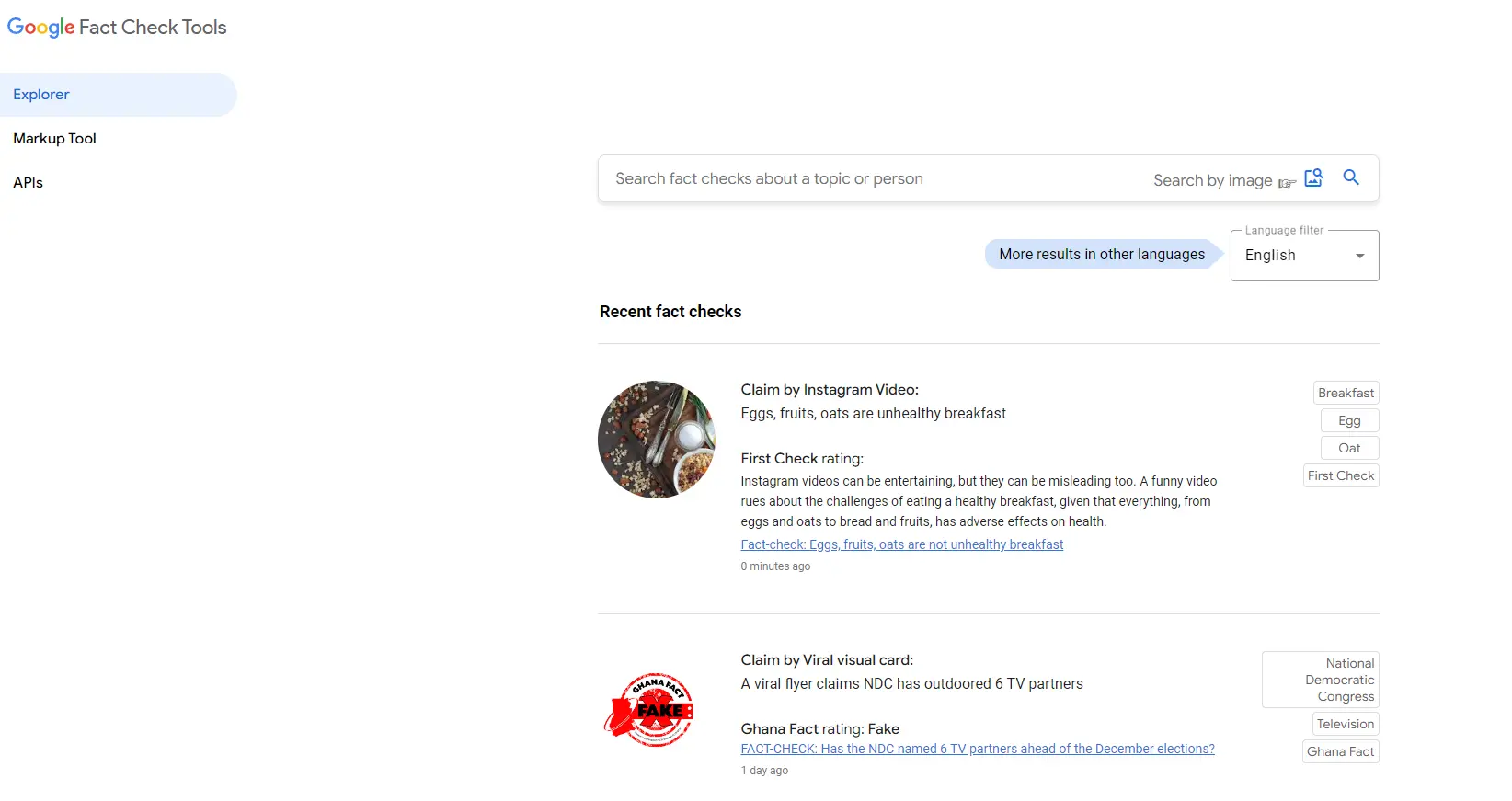

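- Use fact-checking tools: Tools like Google’s Fact Check Explorer let you search published fact-checks. This can help you find out whether a suspicious video, image, or claim has already been identified as a deepfake or another form of online misinformation.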

- Report suspicious content: If you come across a deepfake or any other form of fraudulent content, report it immediately to the relevant authorities or social media platforms. This can help prevent others from falling victim to the same scam.

FAQs

How to detect deepfakes?

There are several signs to look out for when trying to detect a deepfake. These include blurry or distorted images and videos, unnatural facial expressions or movements, inconsistent lighting or shadows, and audio that is slightly off-sync with the video.

What are the risks of deepfakes?

The risks of deepfakes include financial fraud, reputational damage and political manipulation. They can also contribute to the spread of misinformation and conspiracy theories.

How can I protect myself from deepfakes?

Be cautious when sharing personal information or making financial transactions online, even with people you know, and always verify the authenticity of a request before acting on it. Use reliable identity verification and authentication services, check suspicious content with fact-checking tools such as Google’s Fact Check Explorer, and report any deepfakes you encounter to the relevant authorities or social media platforms.

Are deepfakes a cybercrime?

Creating a deepfake is not necessarily a crime in itself, but using one for malicious activities such as fraud, identity theft, defamation, or spreading misinformation can constitute a cybercrime. Perpetrators can face legal action under various cybercrime and fraud laws.